Project update 9 of 27

Power and Stability Comparison

Hi everyone,

So we got great news from one of our Alpha testers:

- Infinitely better stability and

- 1W less power use

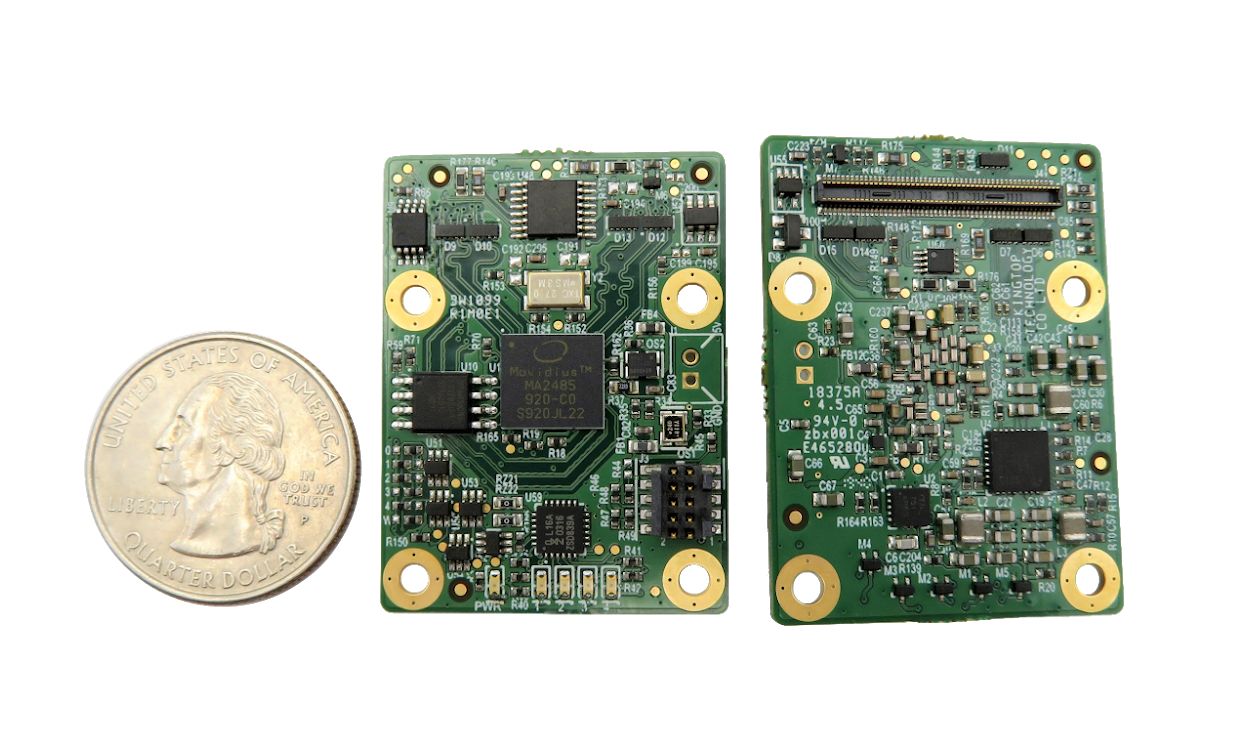

They are using the DepthAI: System on Module (SoM) in their own custom board.

To de-risk the development of their custom board, they implemented the board to support our SoM and also the incumbent Myriad X PCIE board (the manufacturer of which will rename nameless here).

What did they find?

Running the exact same neural inference over USB3, our module uses 1W (yes, 1 Watt!) less power than the incumbent board. They also had to work around instability with the incumbent board, where it would regularly hard-crash, requiring full-reboot of their host Linux system.

This crash would manifest in two ways:

First, if they started performing full-bore neural inference within 1 minute of system powerup, it would crash almost every time.

To solve that, they implemented a delay running neural inference until 1 minute after startup (which was materially detrimental to their use case).

But worse, even with this delay, the system would randomly crash within a couple hours, requiring a full system reboot.

This was killing them.

They spent a long time debugging it, assuming that this was an error on their custom hardware design or software flow.

It surely couldn’t be the fault of this large not-to-be-named OEM who made the incumbent Myriad X PCIE card, right?!

Eventually they gave up on this incumbent PCIE board and swapped in our DepthAI SoM in its place.

Zero crashes, ever.

To this date they haven’t seen a single crash with DepthAI, where they were seeing them hourly with the incumbent!

So in the end; 1W less power, and infinitely better stability. And this is for the Alpha version of DepthAI, literally the very first boards we ordered (and we’re on board order 6 now, with improvements each time).

We were super excited to hear this, and figured you all would be too.

Best,

Brandon & the Luxonis team.