Project update 8 of 20

Field Report: Create Private Cloud Infrastructure with Ten64

by Thomas

Have you ever gotten tired of the numerous devices making up your home IT infrastructure? I sure have! Like many others, I was running a cable modem, a router, and various application servers. All of them were consuming space and electricity despite being idle most of the time. Since commercial residential gateways are becoming increasingly powerful, we are seeing more and more vendors aggregating services into the gateway. While this puts the otherwise wasted CPU power of the gateway to use, it also carries major security risks. Without any isolation measures, a security flaw in the routing system will immediately compromise the running services and vice versa. This holds especially true when multiple services need to be accessible from the WAN (e.g., ownCloud/Nextcloud, web services, git, etc.), each of which increases the attack surface.

An elegant way to mitigate these threats is to virtualize and/or containerize the individual systems on common hardware. Unfortunately, most commercial (embedded) systems do not support virtualization and are not powerful enough to reasonably run services inside containers.

Fortunately, the Ten64 provides a perfect solution to this problem. Not only is the 8-core LS1088A more than powerful enough to run plenty of services in parallel, its arm64 architecture also allows for hardware virtualization. The inherent need for large amounts of RAM for the guest systems can be met by the SODIMM slot that supports up to 32 GB ECC modules.

In addition, the LS1088A comes with DPAA2, which abstracts the network and packet processing hardware into an object-oriented interface. This allows for a very flexible and dynamic use of the network and acceleration resources. What makes DPAA2 especially interesting is the fact that it can pass a set of network resources to a virtualized guest system. It is thus possible to assign a network interface to a guest system without the host system’s kernel ever touching the data on the wire. So, even if there is a security flaw in the IP stack of the host kernel, traffic on that interface offers no way to reach the host. This unique feature of the LS1088A therefore adds an extra layer of security that moves the virtualized system as close to separate physical devices as possible.

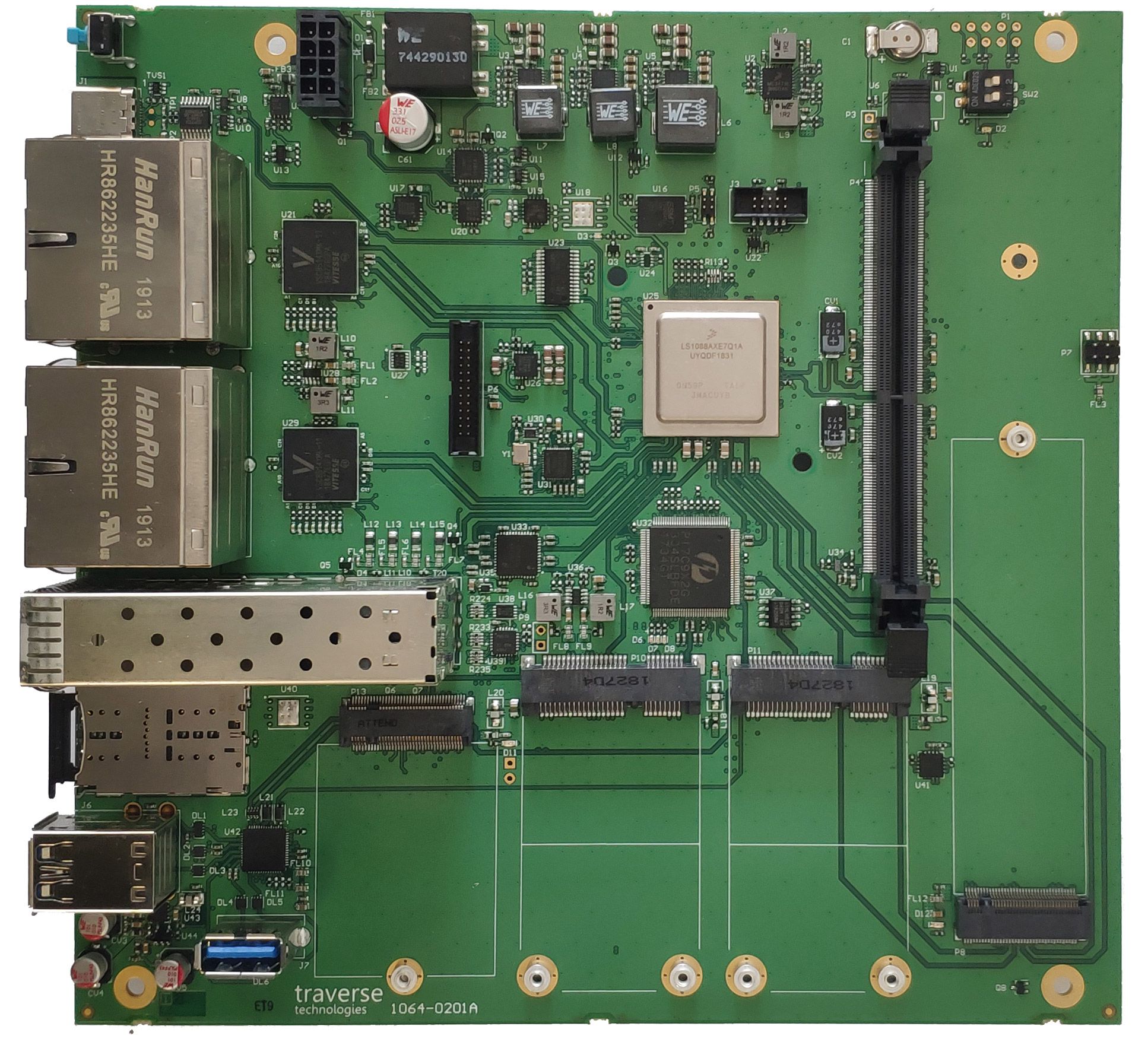

To implement my ideas, Matt provided me with a development sample of the Ten64. This was the first revision of the board, so the device shown in the photos here looks different from the current revision. With 16 GB RAM, it was equipped well enough to run several VMs in parallel. It came with the OpenWrt-based μVirt preinstalled, which made it easy to get my first VM running. Unfortunately, the 4.19 LTS kernel used in μVirt did not include driver support for DPAA2 device pass-through into VMs. The version of qemu available in μVirt also lacked the patches needed to handle DPAA2 device pass-through.

Over several weeks of late-night work, I managed to identify the necessary patches from the NXP LSDK kernel and then port them to the OpenWrt kernel used by μVirt. For qemu, this process was even more complicated since the latest supported version in the LSDK was 2.9. Thus, I forward-ported the DPAA2 patches for qemu to major release 3. (Meanwhile, newer versions of the kernel patches and qemu are available from NXP and most patches are in the process of being mainlined.) With these changes in place, I finally managed to pass the DPAA2 resources through to my guest systems.

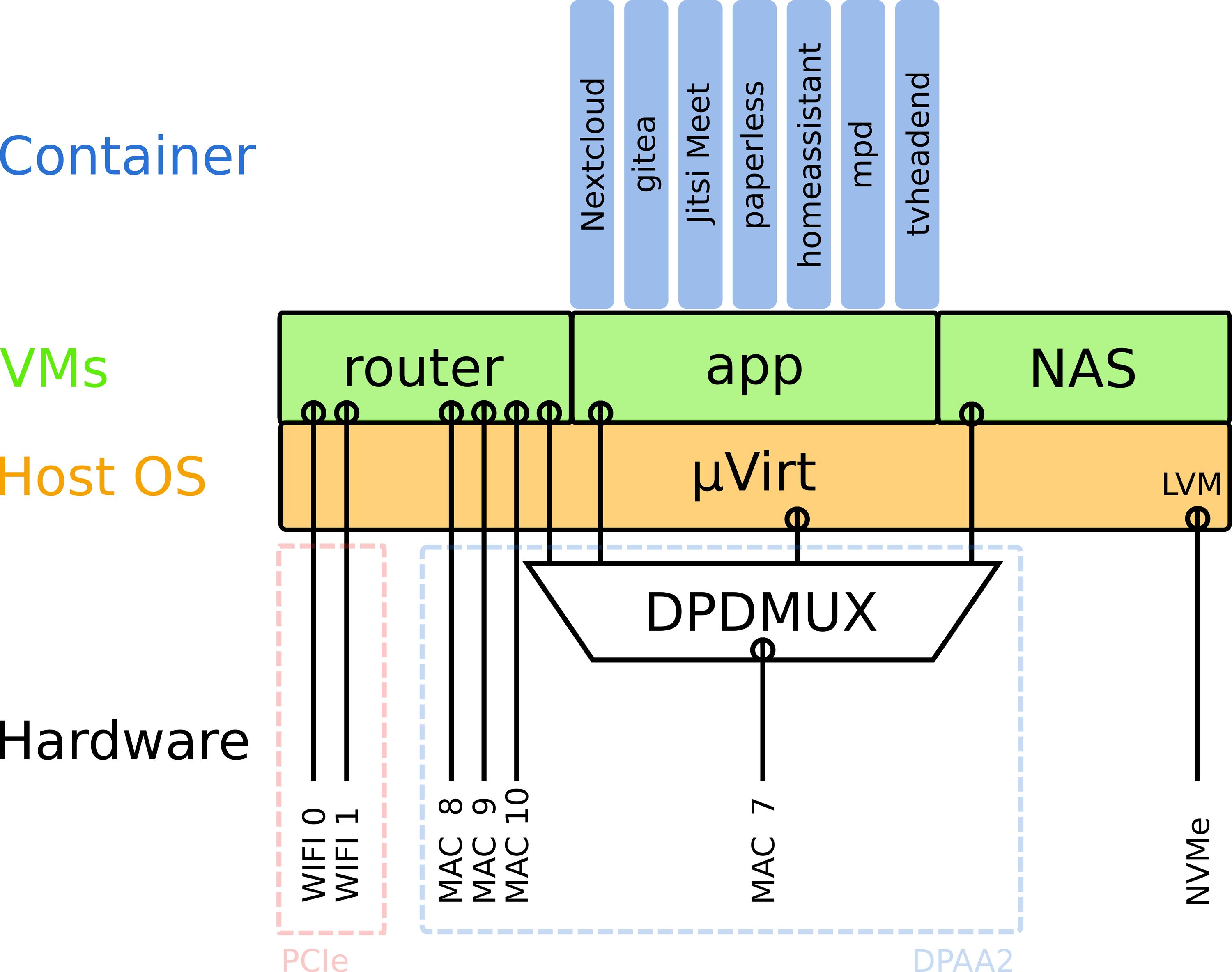

The System

The core idea was to separate the routing, the storage, and the application platform using virtualization (i.e., each system running its own kernel). The application platform in turn should isolate the individual services by means of Docker containers. The access to the hardware should be passed through to the guest systems as much as possible. Thus, I ended up with the following scheme:

The Hardware

The storage for the whole system is provided by a 1 TB Samsung 970 Evo Plus SSD in the M.2 slot. This SSD cannot be run at its full potential since only two PCIe lanes are available at the M.2 slot. However, two lanes of PCIe 3.0 still beat SATA3. The SSD is accessed by the host system and split into logical volumes by means of LVM2. This allows dynamic allocation of storage to the individual VMs. In addition to the ample potential for wired connections the Ten64 already includes, I decided to add Wi-Fi connectivity by adding the following mPCIe cards:

- COMPEX WLE1216V5-20 802.11ac wave2 4x4 MU-MIMO

- COMPEX WLE900VX 802.11ac 3x3 MIMO

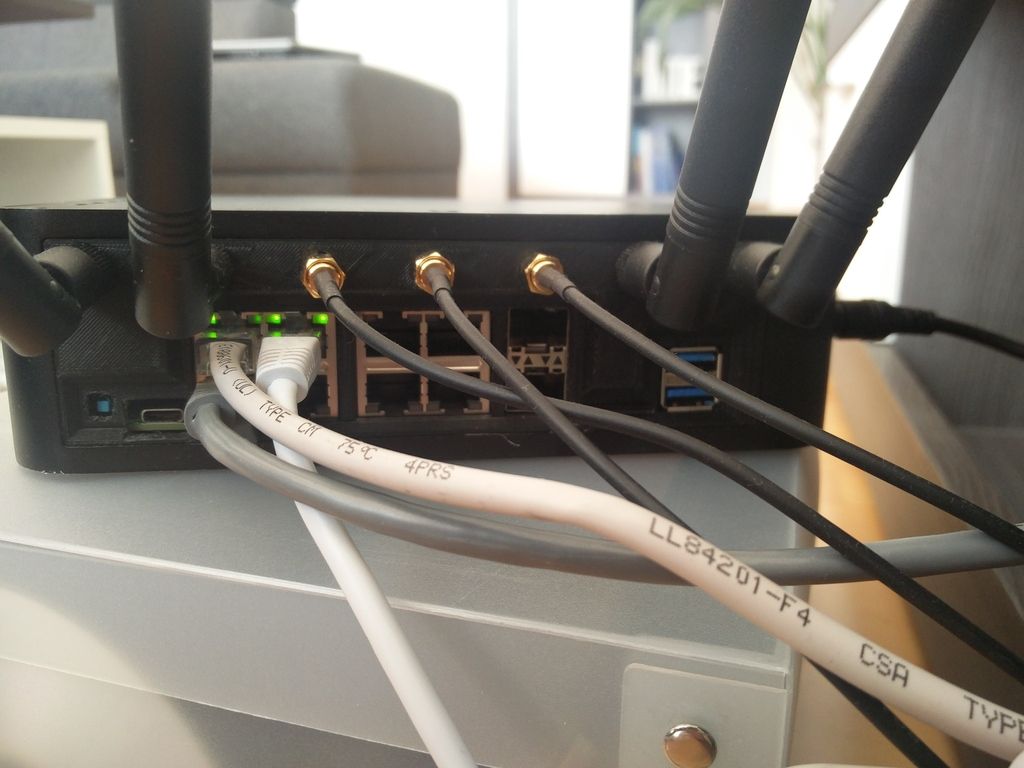

To mount/feed through the required seven antennas, I 3D-printed a rear plate for the Mini-ITX case provided by Matt.
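The LVM2-based storage split described in this section can be sketched as a small dry-run helper. This is only an illustration: the volume group name `vmdata`, the volume names, and the sizes are assumptions and not the values used on my system. The helper prints `lvcreate` command lines rather than running them.

```python
import shlex


def lvcreate_commands(volume_group, volumes):
    """Return one `lvcreate` command line per (name, size) entry."""
    commands = []
    for name, size in volumes.items():
        # -L sets the volume size, -n sets the logical volume name.
        args = ["lvcreate", "-L", size, "-n", name, volume_group]
        commands.append(shlex.join(args))
    return commands


# Hypothetical plan: one logical volume per VM (names and sizes are made up).
plan = {"router": "2G", "docker": "200G", "storage": "600G"}

for line in lvcreate_commands("vmdata", plan):
    print(line)
```

Because the volumes live in a volume group rather than in fixed partitions, a VM that runs short on space can later be given more with `lvextend`.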

The Software

The Ten64 is running μVirt to host the VMs. Based on OpenWrt, μVirt is very slim and leaves almost all the resources of the system to the VMs. Since PCI pass-through is a well-established technique in qemu, it was straightforward to pass the Wi-Fi cards directly to the router VM. In μVirt it is as easy as finding the ID of your PCI device with `lspci` and adding `list pcidevice '<ID>'` to the VM’s section of the config file.

DPAA2 resources can be passed in a very similar way. As a first step, a so-called DPRC needs to be created. This is a DPAA2 container object that gathers a set of DPAA2 resources like MACs, queues, NICs, accelerators, etc. Once the DPRC is defined, it can be passed to the guest VM by specifying `list dpaa2container 'dprc.<num>'` in the VM’s config. If the guest kernel has support for DPAA2 devices, it can now access the network hardware.
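As a rough sketch of the two pass-through settings, the snippet below picks PCI addresses out of `lspci` output and renders the corresponding config lines. Several things here are assumptions made for illustration: that the ID meant is the address in the first column of the `lspci` output, that the VM section is declared as `config vm '<name>'`, the sample `lspci` text, and the container number `dprc.2`. Only the `list pcidevice` and `list dpaa2container` option names come from the text above.

```python
def find_pci_addresses(lspci_output, keyword):
    """Return the first column of every lspci line that mentions keyword."""
    matches = []
    for line in lspci_output.splitlines():
        if line.strip() and keyword.lower() in line.lower():
            matches.append(line.split()[0])
    return matches


def render_vm_section(name, pci_ids=(), dprcs=()):
    """Render a VM config section with PCI and DPAA2 pass-through entries."""
    lines = [f"config vm '{name}'"]  # section type is an assumption
    lines += [f"\tlist pcidevice '{pci}'" for pci in pci_ids]
    lines += [f"\tlist dpaa2container '{dprc}'" for dprc in dprcs]
    return "\n".join(lines)


# Made-up lspci output, not captured from a real Ten64.
SAMPLE_LSPCI = """\
0000:00:00.0 PCI bridge: Example Vendor root port
0001:01:00.0 Network controller: Example Vendor 802.11ac adapter
"""

cards = find_pci_addresses(SAMPLE_LSPCI, "network controller")
print(render_vm_section("router", cards, ["dprc.2"]))
```

On a real system, the address and container number have to be taken from the actual `lspci` output and from the DPRC that was created for the guest.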

The ability to pass resources to a guest VM is only one of the many benefits of the DPAA2 infrastructure. Another is the large potential for hardware offloading of network I/O tasks. In my use case, the router VM has to do a lot of NAT (WAN<->LAN) and bridging (LAN). For the latter, it would be great to have the DPAA2 "switch" object that can connect several virtual and physical NICs just like a hardware switch. However, unlike its bigger brother, the LS1088A does not support the "switch" object. What is supported, however, is the DPDMUX object that can connect exactly one physical (MAC) port to an arbitrary number of virtual ports (NICs). Furthermore, the DPDMUX object can be set to bridge mode so that the traffic is bridged between the virtual ports in hardware. This allows offloading the communication between the VMs to the DPAA2 hardware. The network interface of the host system can also be added to the DPDMUX object. This comes in quite handy when the router VM is not running: it is still possible to access the host via the physical port attached to the DPDMUX.
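The forwarding behavior described above can be illustrated with a toy model. This is not the DPAA2 API and it simplifies heavily (for example, frames from the wire with an unknown destination are simply dropped); the class, port names, and MAC addresses are all invented for the example.

```python
class ToyDpdmux:
    """Toy model: one uplink (physical MAC) and any number of virtual ports."""

    UPLINK = "uplink"

    def __init__(self, bridge_mode):
        self.bridge_mode = bridge_mode
        self.mac_to_port = {}  # destination MAC -> virtual port name

    def add_virtual_port(self, port, mac):
        self.mac_to_port[mac] = port

    def forward(self, ingress_port, dst_mac):
        """Return the port a frame leaves on, or None if it is dropped."""
        target = self.mac_to_port.get(dst_mac)
        if ingress_port == self.UPLINK:
            # From the wire: hand the frame to the matching virtual port.
            return target
        if self.bridge_mode and target is not None and target != ingress_port:
            # Bridge mode: virtual ports reach each other directly.
            return target
        # Everything else leaves through the physical port.
        return self.UPLINK


mux = ToyDpdmux(bridge_mode=True)
mux.add_virtual_port("router-vm", "02:00:00:00:00:01")
mux.add_virtual_port("docker-vm", "02:00:00:00:00:02")
mux.add_virtual_port("host", "02:00:00:00:00:03")

print(mux.forward("docker-vm", "02:00:00:00:00:01"))
print(mux.forward("docker-vm", "02:00:00:00:00:99"))
print(mux.forward("uplink", "02:00:00:00:00:03"))
```

The last call mirrors the fallback case from the text: with the host attached as one more virtual port, a frame arriving on the physical port can still reach the host even when the router VM is down.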

The Guest VMs

As a router, I’m running OpenWrt (target: armvirt) with minor patches to the kernel to support DPAA2 objects inside of a VM. As a Docker host, I’m running Alpine Linux with just the bare minimum to launch the Docker binary. On top of the Docker host, I’m currently running 12 stacks with 26 containers to provide services like Nextcloud, HomeAssistant, gitea, Jitsi, and paperless, to name a few. The Ten64 is handling all of them with ease.

Conclusion

The Ten64 is the ideal platform to implement a secure, self-hosted cloud. The eight-core LS1088A combined with up to 32 GB of DDR4 RAM provides sufficient resources even for demanding applications. The I/O-offloading and virtualization capabilities set it apart from similar platforms. The detailed documentation and the availability of all the sources make it fun to work and tinker with the Ten64. For anyone like me, looking for a secure way to decrease the number of physical devices wasting space and money, I can only recommend taking a look at the Ten64!